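The High-Performance Machine Learning (HPML) Lab focuses on using high-performance computing techniques to develop fast, resource-efficient machine learning algorithms. Our research centers on two areas: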

- High-performance machine learning systems

- Efficient machine learning algorithms

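The lab works closely with the High-Performance Computing Platform team at the Hong Kong University of Science and Technology (Guangzhou), whose advanced computing resources support our cutting-edge and challenging research.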

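The lab has secured several competitive research grants, including support from the National Natural Science Foundation of China (NSFC) and the China Computer Federation (CCF). These grants recognize the scientific value of our research and provide solid funding for continued innovation.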

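Through an interdisciplinary approach and a commitment to research excellence, the HPML Lab continues to push the boundaries of high-performance machine learning, aiming for breakthroughs in both theory and application.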

We have a track record of publications in top-tier conferences and journals. Some recent achievements include:

- April 2025: The paper “SpInfer: Leveraging Low-Level Sparsity for Efficient Large Language Model Inference on GPUs” has received the Best Paper Award of EuroSys 2025. Congratulations to Mr. Ruibo FAN and all co-authors!

- March 2025: Two papers, “Mitigating Contention in Stream Multiprocessors for Pipelined Mixture of Experts: An SM-Aware Scheduling Approach” and “Mast: Efficient Training of Mixture-of-Experts Transformers with Task Pipelining and Ordering”, have been accepted by ICDCS 2025. Congratulations to Mr. Xinglin PAN and all co-authors! (104 papers were accepted out of 529 submissions)

- Jan 2025: The paper “SpInfer: Leveraging Low-Level Sparsity for Efficient Large Language Model Inference on GPUs” has been accepted by EuroSys 2025. Congratulations to Mr. Ruibo FAN and all co-authors! (30 papers were accepted out of 367 new submissions in the fall submission round)

- Jan 2025: Three papers have been accepted by ICLR 2025: “STBLLM: Breaking the 1-Bit Barrier with Structured Binary LLMs”, “Hot-pluggable Federated Learning: Bridging General and Personalized FL via Dynamic Selection”, and “The Lottery LLM Hypothesis, Rethinking What Abilities Should LLM Compression Preserve?” (Blogpost Track). Congratulations to Mr. Peijie DONG, Dr. Zhenheng TANG, and all co-authors!

- Dec 2024: The paper “ParZC: Parametric Zero-Cost Proxies for Efficient NAS” has been accepted by AAAI 2025 and selected for oral presentation. Congratulations to Mr. Peijie DONG and all co-authors! (600 oral presentations among 3,032 accepted papers from 12,957 submissions)

- Dec 2024: The paper “FSMoE: A Flexible and Scalable Training System for Sparse Mixture-of-Experts Models” has been accepted by ACM ASPLOS 2025. Congratulations to Mr. Xinglin PAN and all co-authors!

- Sept 2024: The paper “FuseFL: One-Shot Federated Learning through the Lens of Causality with Progressive Model Fusion” has been accepted by NeurIPS 2024 as a spotlight. Two papers, “Discovering Sparsity Allocation for Layer-wise Pruning of Large Language Models” and “Should We Really Edit Language Models? On the Evaluation of Edited Language Models”, have also been accepted by NeurIPS 2024.

- Sept 2024: Two papers, “LongGenBench: Long-context Generation Benchmark” and “LPZero: Language Model Zero-cost Proxy Search from Zero”, have been accepted by EMNLP Findings 2024.

- July 2024: The paper “3D Question Answering for City Scene Understanding” has been accepted by ACM International Conference on Multimedia 2024.

- July 2024: The paper “Multi-Task Domain Adaptation for Language Grounding with 3D Objects” has been accepted by ECCV 2024.

- May 2024: The paper “BitDistiller: Unleashing the Potential of Sub-4-Bit LLMs via Self-Distillation” has been accepted by ACL 2024, and the paper “Can We Continually Edit Language Models? On the Knowledge Attenuation in Sequential Model Editing” has been accepted by ACL Findings 2024.

- May 2024: The paper “Pruner-Zero: Evolving Symbolic Pruning Metric From Scratch for Large Language Models” was accepted by ICML 2024.

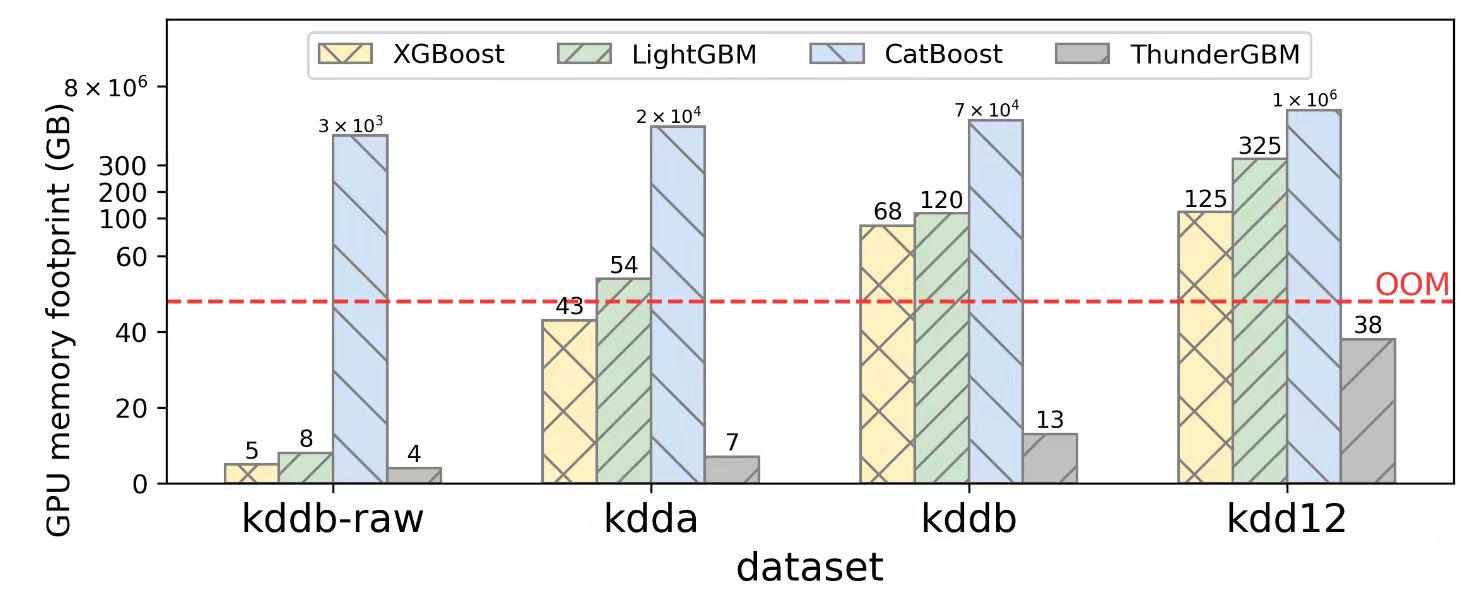

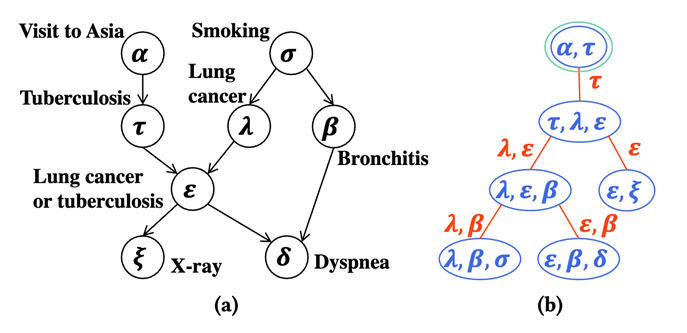

- May 2024: The paper on PGM inference acceleration was accepted by USENIX ATC'24.

- March 2024: The paper “DTC-SpMM: Bridging the Gap in Accelerating General Sparse Matrix Multiplication with Tensor Cores” was accepted by ACM ASPLOS 2024.

- Feb 2024: The paper “FedImpro: Measuring and Improving Client Update in Federated Learning” has been accepted by ICLR 2024.

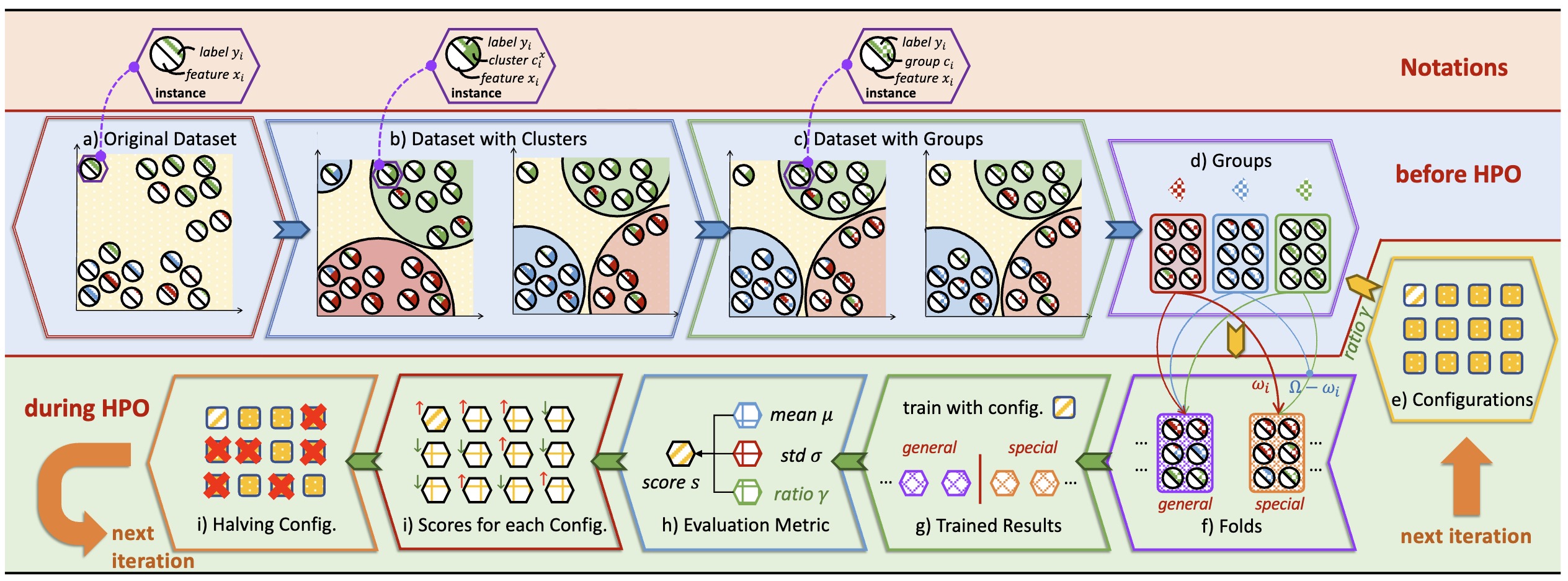

- Feb 2024: The paper on Hyper-parameter Optimization with Adaptive Fidelity was accepted by CVPR'24.

- Feb 2024: The paper “ScheMoE: An Extensible Mixture-of-Experts Distributed Training System with Tasks Scheduling” has been accepted by EuroSys 2024.

- Jan 2024: The paper on Bandit-based Hyperparameter Optimization was accepted by ICDE'24.

- Dec 2023: The paper “Benchmarking and Dissecting the Nvidia Hopper GPU Architecture” has been accepted by IEEE IPDPS 2024.

- Dec 2023: The paper “CF-NeRF: Camera Parameter Free Neural Radiance Fields with Incremental Learning” has been accepted by AAAI 2024.

- Dec 2023: Two papers, “Parm: Efficient Training of Large Sparsely-Activated Models with Dedicated Schedules” and “Galaxy: A Resource-Efficient Collaborative Edge AI System for In-situ Transformer Inference”, have been accepted by IEEE INFOCOM 2024.

- Nov 2023: The paper “Asteroid: Resource-Efficient Hybrid Pipeline Parallelism for Collaborative DNN Training on Heterogeneous Edge Devices” has been conditionally accepted by ACM MobiCom 2024.

- May 2023: The paper “AdaProp: Learning Adaptive Propagation for Graph Neural Network based Knowledge Graph Reasoning” has been accepted by ACM KDD 2023.

- April 2023: Two papers “Evaluation and Optimization of Gradient Compression for Distributed Deep Learning” and “Accelerating Distributed Deep Learning with Fine-Grained All-Reduce Pipelining” have been accepted by IEEE ICDCS 2023.

- March 2023: The paper “Improving Fairness in Coexisting 5G and Wi-Fi Network on Unlicensed Band with URLLC” has been accepted by IEEE/ACM IWQoS 2023.

- Jan 2023: The paper “Fast Sparse GPU Kernels for Accelerated Training of Graph Neural Networks” has been accepted by IEEE IPDPS 2023.

- Dec 2022: The paper “GossipFL: A Decentralized Federated Learning Framework with Sparsified and Adaptive Communication” has been accepted by IEEE TPDS.

- Dec 2022: The paper “PipeMoE: Accelerating Mixture-of-Experts through Adaptive Pipelining” has been accepted by IEEE INFOCOM 2023.

- Nov 2022: Two papers, “Rethinking Disparity: A depth range free Multi-View Stereo based on Disparity” and “NAS-LID: Efficient Neural Architecture Search with Local Intrinsic Dimension”, have been accepted by AAAI 2023.

- May 2021: The paper “Exploiting Simultaneous Communications to Accelerate Data Parallel Distributed Deep Learning” has received the Best Paper Award of IEEE INFOCOM 2021. A preprint can be found at arXiv.

- April 2021: The paper “BU-Trace: A Permissionless Mobile System for Privacy-Preserving Intelligent Contact Tracing” has received the Best Paper Award of the 2021 International Workshop on Mobile Ubiquitous Systems and Technologies, co-located with DASFAA 2021. A preprint can be found at arXiv.

For more information, please visit the homepages of Prof. Xiaowen CHU, Prof. Qiong LUO and Asst. Prof. Zeyi WEN.

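We are recruiting talented researchers interested in high-performance machine learning. Open positions include PhD students, postdoctoral fellows, and research assistants. If you are passionate about this field and want to grow in a collaborative, dynamic research environment, we welcome you to join us and help push the technical frontier.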