Research Project

Resource Efficient LLM Fine-tuning and Serving

Abstract

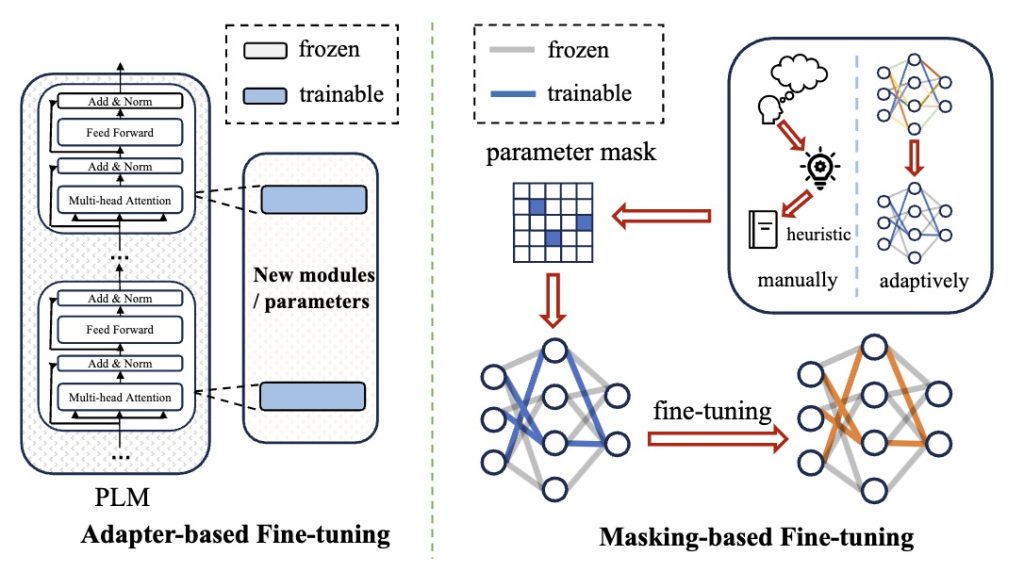

Large Language Models (LLMs) have achieved great success in many real-world applications. However, fine-tuning and serving LLMs require substantial memory and computing resources. This project aims to develop techniques that improve the resource efficiency of LLM fine-tuning and serving.

Project Members

文泽忆

Assistant Professor

Project Period

2023-Present

Research Areas

Data-driven AI & Machine Learning, High-Performance Systems for Data Analytics

Keywords

Efficiency, Fine-tuning, Large Language Models, Model Serving