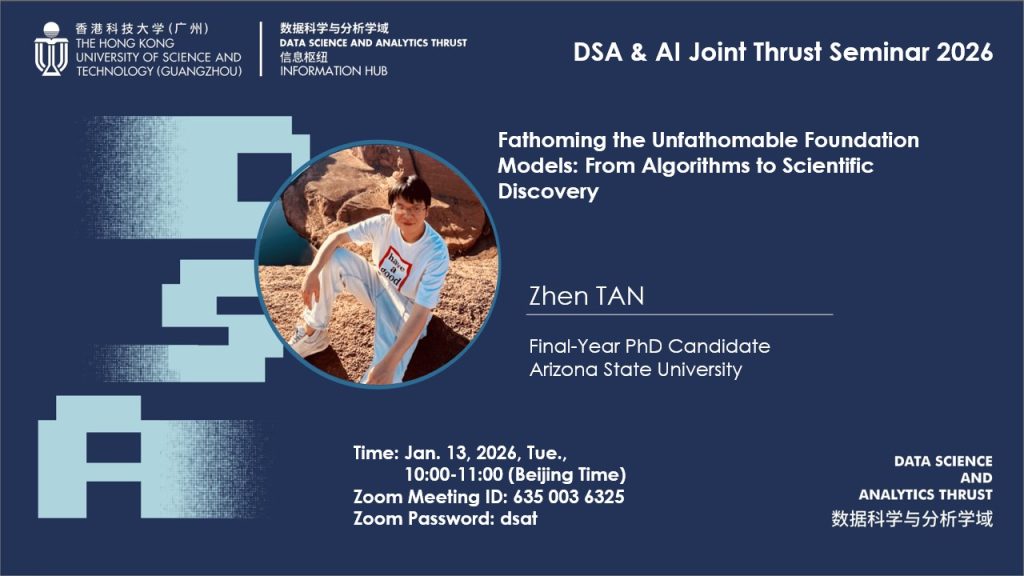

Fathoming the Unfathomable Foundation Models: From Algorithms to Scientific Discovery

ABSTRACT

As foundation models become central to information systems, cybersecurity, and scientific decision-making, their opacity raises critical challenges for trust, accountability, and governance. While much prior work has focused on post-hoc explainability, my research shows that such approaches are intrinsically limited and can even obscure model failures. In this talk, I will present a shift from explaining opaque systems to designing AI that is trustworthy and interpretable by construction. I will introduce diagnostic frameworks that reveal failures in explanation faithfulness and security, such as explanatory inversion and vulnerability to retrieval and communication attacks. I will then demonstrate how concept-based, human-centered model architectures enable glass-box reasoning, actionable interventions, and self-reflection in large language models (LLMs). I will conclude by showing how these ideas translate into real-world impact across AI-driven cybersecurity, conversational agents, agricultural robotics, and neuroscience, and by outlining a vision for information-centric AI systems that are transparent, reliable, and aligned with human values.

SPEAKER BIO

Zhen Tan is a final-year Ph.D. candidate in Computer Science at Arizona State University, advised by Prof. Huan Liu. His research focuses on trustworthy and explainable AI, with an emphasis on understanding and controlling the behavior of large foundation models in information and cyber systems. His work advances interpretability-by-design approaches; diagnostic frameworks for explanation faithfulness, robustness, and security; defenses against adversarial manipulation of AI models; and interpretability-driven alignment. His research has received multiple paper awards, including Best Paper at PAKDD, and has been published in leading venues such as ACL, EMNLP, NeurIPS, ICML, ICLR, KDD, and AAAI.

Date

13 January 2026

Time

10:00 - 11:00

Join Link

Zoom Meeting ID: 635 003 6325